Meta recently launched Llama 3, its most advanced open-source model to date. It delivers impressive performance across benchmarks like HumanEval, GPQA, GSM-8K, MATH, and MMLU. Llama 3 is available in two versions: an 8 billion parameter model tailored for client applications and a 70 billion parameter model optimized for datacenter and cloud environments.
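To get a feel for why the smaller model targets client machines, here is a rough back-of-the-envelope sketch of the memory needed just to hold the weights. It assumes nominal parameter counts of 8 and 70 billion and two illustrative precisions (16-bit and 4-bit); the precision a given download actually uses is not stated here, and the helper name `weight_memory_gb` is hypothetical. Real usage will be higher because of the context cache and runtime overhead.

```python
def weight_memory_gb(num_params, bits_per_weight):
    """Approximate memory for the weights alone, in GB (10^9 bytes).

    Ignores the context (KV) cache, activations, and runtime overhead.
    """
    return num_params * bits_per_weight / 8 / 1e9


# Nominal parameter counts; the bit widths are illustrative assumptions.
for name, params in [("Llama 3 8B", 8e9), ("Llama 3 70B", 70e9)]:
    for bits in (16, 4):
        size = weight_memory_gb(params, bits)
        print(f"{name} at {bits}-bit: ~{size:.0f} GB")
```

Under these assumptions the 8 billion parameter model needs roughly 4 to 16 GB for its weights, while the 70 billion parameter model needs roughly 35 to 140 GB.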

Great news for AMD users! If you have a Ryzen™ AI¹-based AI PC or an AMD Radeon™ 7000 series graphics card², you can now run Llama 3 locally without any coding expertise.

AMD’s Ryzen™ Mobile 7040 Series and Ryzen™ Mobile 8040 Series processors come with a Neural Processing Unit (NPU) designed specifically for handling AI tasks. With up to 16 TOPS of performance, the NPU enables efficient execution of AI workloads. Additionally, AMD Ryzen™ AI APUs feature a CPU and iGPU for managing on-demand tasks.

1. Download the correct version of LM Studio:

| For AMD Ryzen Processors | For AMD Radeon RX 7000 Series Graphics Cards |
| --- | --- |
| LM Studio – Windows | LM Studio – ROCm™ technical preview |

2. Run the file.

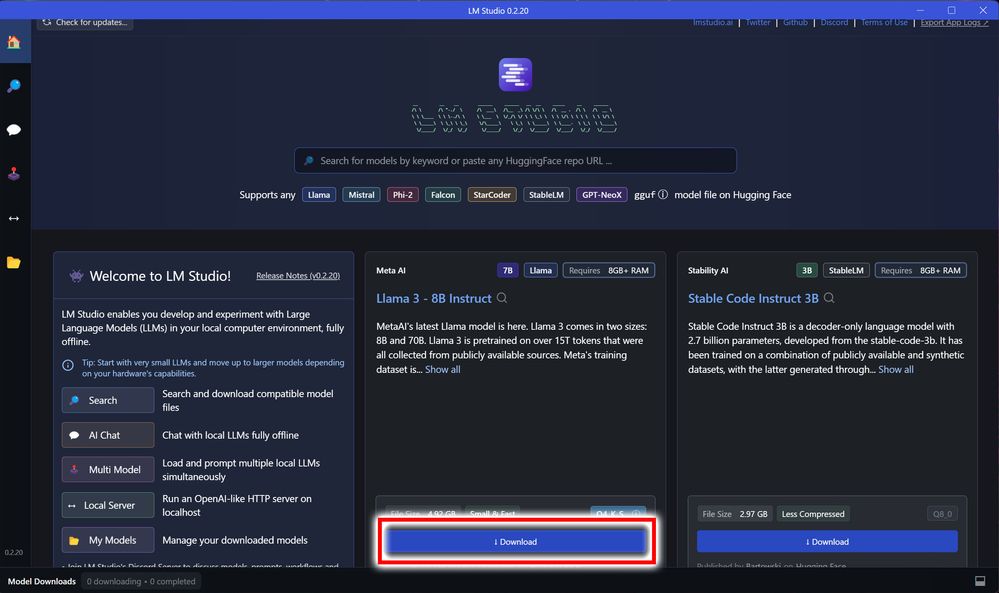

3. Click the “Download” button on the Llama 3 – 8B Instruct card.

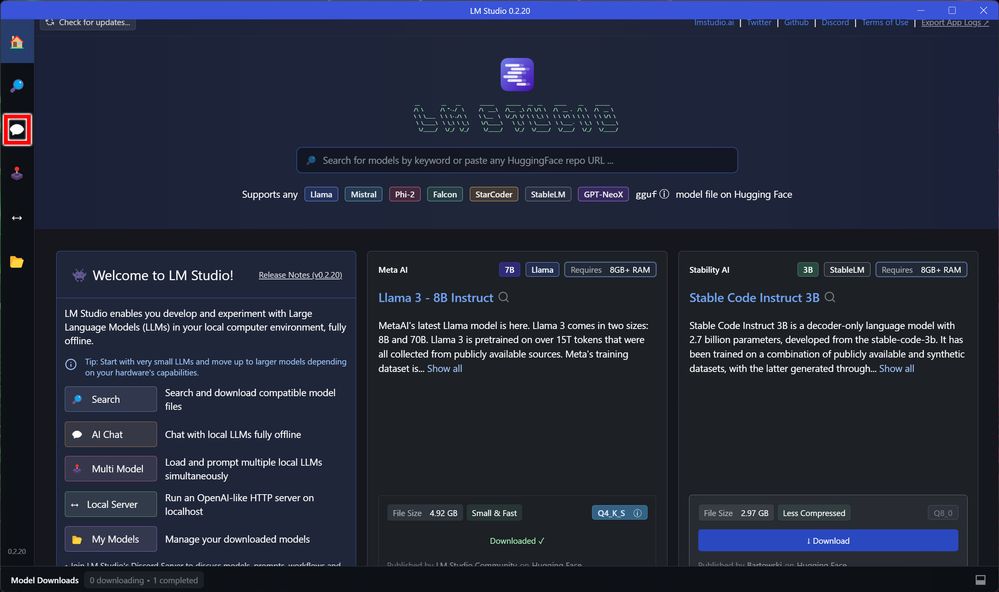

4. Once downloaded, click the chat icon on the left side of the screen.

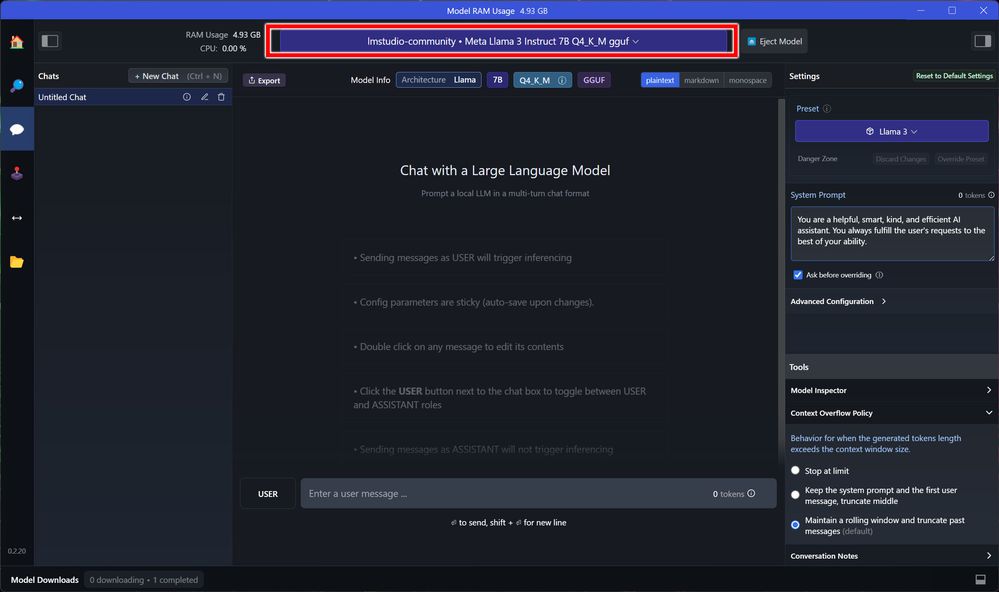

5. Select Llama 3 from the drop-down list in the top center.

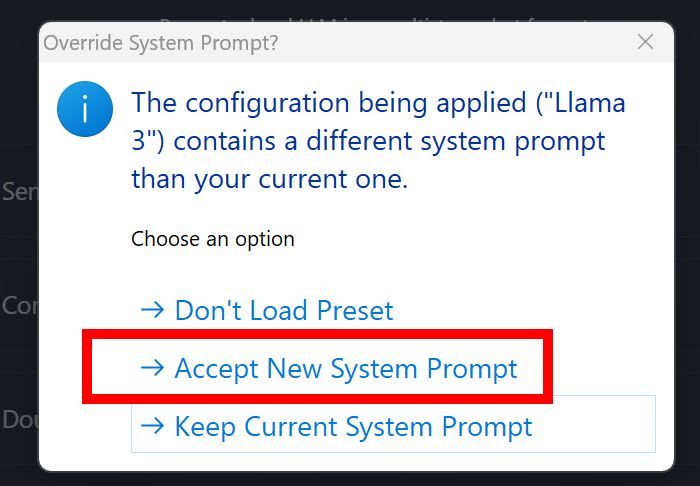

6. Select “Accept New System Prompt” when prompted.

7. If you are using an AMD Ryzen™ AI-based AI PC, start chatting!

For users with AMD Radeon™ 7000 series graphics cards, there are just a couple of additional steps:

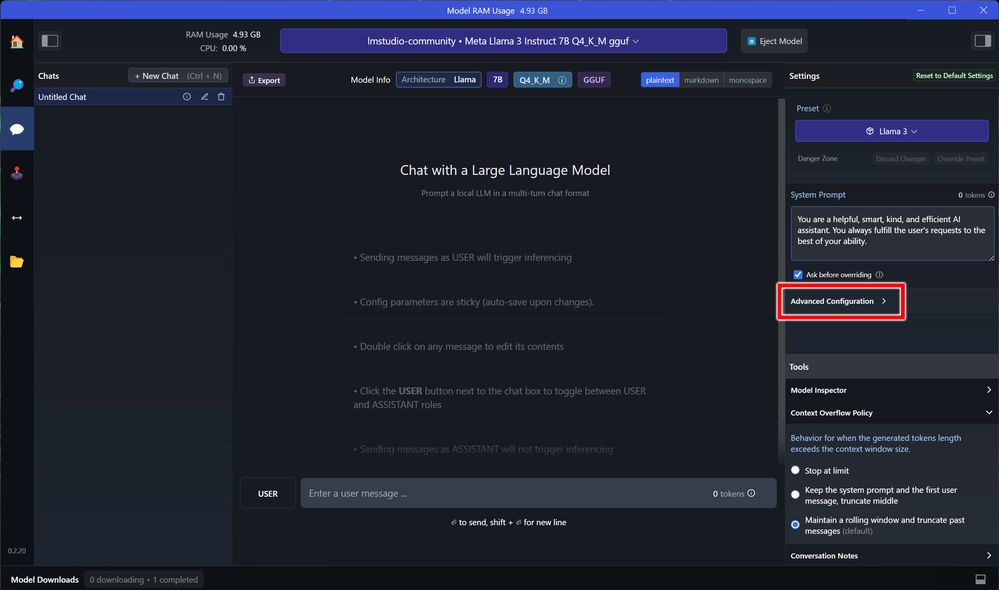

8. Click on “Advanced Configuration” on the right-hand side.

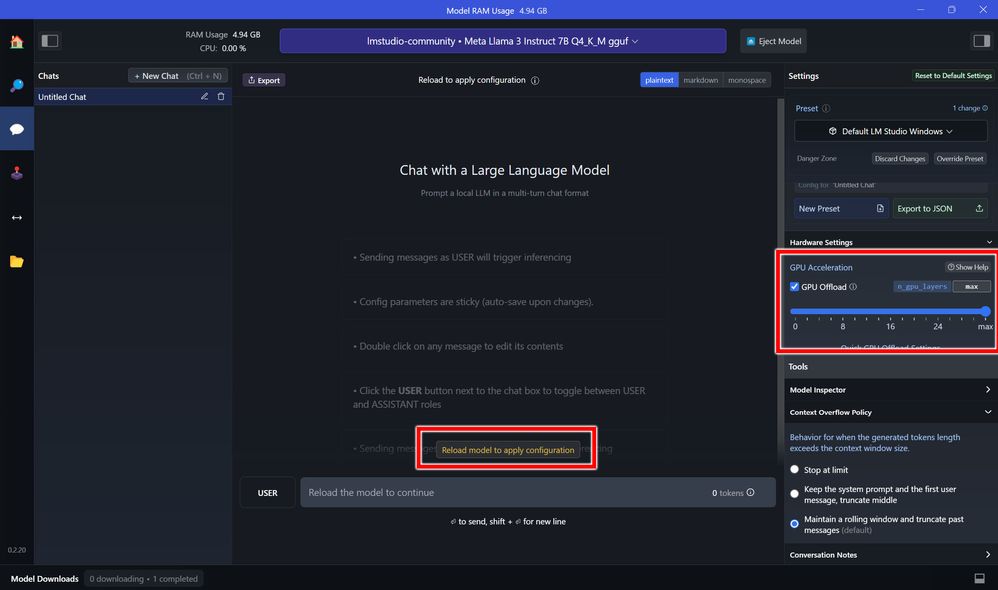

9. Scroll down until you see “Hardware Settings”. Make sure “GPU Offload” is selected and the slider is all the way to the right (Max). Click on “Reload model to apply configuration” if prompted.

10. Start chatting with Meta’s new Llama 3 chatbot.
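Once the model is loaded, LM Studio can also expose it through an optional local server that follows the OpenAI chat completions format. The sketch below is not part of the no-code steps above. It assumes you have started that server inside LM Studio and that it listens on `http://localhost:1234/v1` (check the address shown in your own installation). The helper names `build_chat_request` and `ask_local_llama`, the `local-model` placeholder, and the system prompt are hypothetical and chosen only for illustration.

```python
import json
import urllib.request

# Assumption: LM Studio's optional local server is running at this address.
# Adjust the host and port to match what LM Studio shows on your machine.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(prompt, model="local-model", temperature=0.7):
    """Build an OpenAI-style chat completion payload.

    `model` is a placeholder name (hypothetical); replace it with the
    identifier LM Studio reports for the model you loaded.
    """
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": prompt},
        ],
        "temperature": temperature,
    }


def ask_local_llama(prompt, base_url=BASE_URL, timeout=120):
    """Send the prompt to the local server and return the reply text."""
    payload = json.dumps(build_chat_request(prompt)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # Raises urllib.error.URLError if the server is not running.
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With the server running, calling `ask_local_llama("Summarize what an NPU does.")` should return the model's reply as a string. Everything stays on your own machine, since the request goes to `localhost`.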