Qualcomm Technologies, Inc. and Meta announced a collaboration to optimize the execution of Meta Llama 3 large language models (LLMs) directly on smartphones, PCs, VR/AR headsets, vehicles, and more. The ability to run Llama 3 on devices offers significant advantages, including superior responsiveness, enhanced privacy, improved reliability, and more personalized experiences for users.

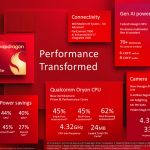

The collaboration aims to democratize access to generative AI capabilities by enabling OEMs and developers to make Llama 3 accessible on devices powered by upcoming flagship Snapdragon® platforms. Qualcomm Technologies’ heterogeneous computing architecture, which combines its industry-leading CPU, GPU, and NPU with a cutting-edge memory architecture, enables customers, partners, and developers to maximize application performance, thermal efficiency, and battery life.

Developers will be able to access the resources and tools to run Llama 3 optimally on Snapdragon platforms via the Qualcomm® AI Hub, which currently offers approximately 100 optimized AI models. The Qualcomm AI Hub reduces time-to-market for developers and unlocks the benefits of on-device AI for their apps.