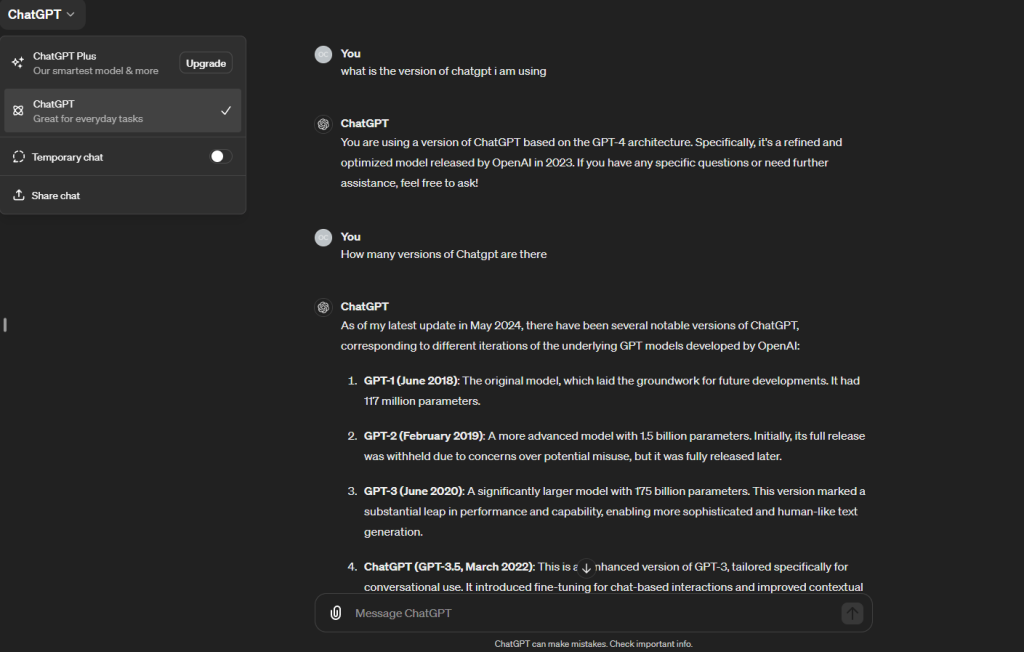

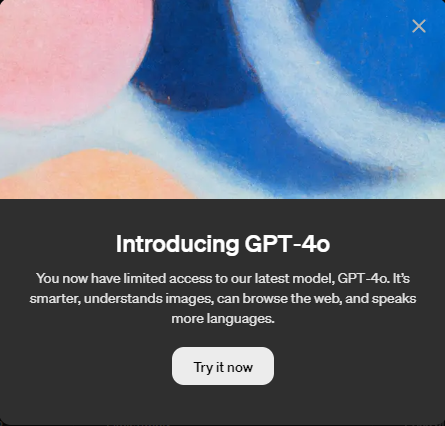

OpenAI has recently announced GPT-4o, an advanced multimodal model that integrates text, vision, and audio capabilities and is being made available for free to all ChatGPT users [1]. It keeps the basic functions of ChatGPT, such as responding to user questions and requests, and adds voice interaction.

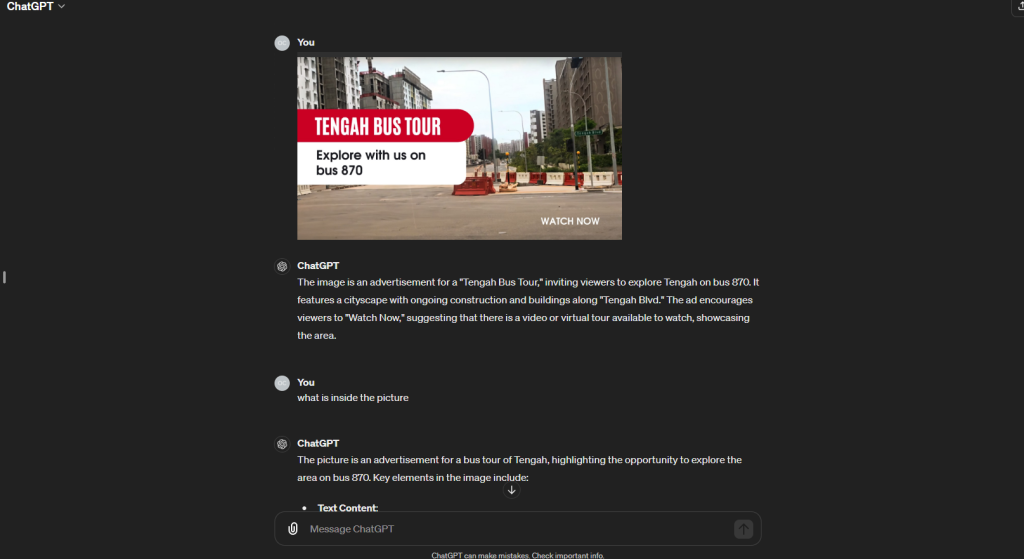

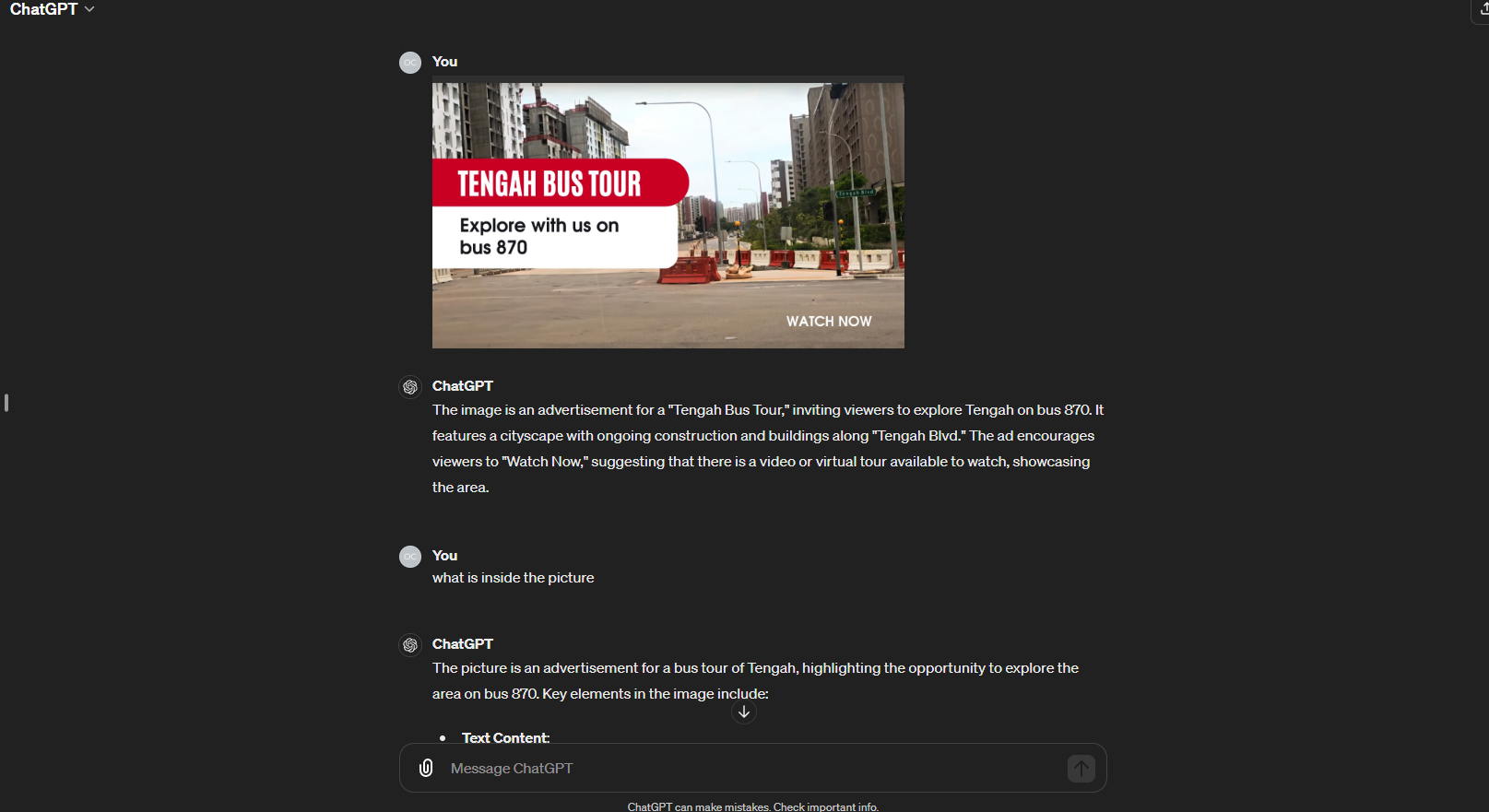

GPT-4o is designed to be much faster than its predecessor and improves on its capabilities across text, voice, and vision. For instance, it can understand and discuss images shared by users: given a photo of a menu in another language, it can translate the menu and explain the history and significance of the dishes on it.

In the future, GPT-4o will allow more natural, real-time voice conversations, as well as conversations with ChatGPT over real-time video. OpenAI plans to launch a new Voice Mode with these capabilities in alpha soon, with early access for Plus users as it rolls out more broadly.

GPT-4o's language capabilities have also improved in both quality and speed, and it supports more than 50 languages. The release is a significant step toward making advanced AI tools more accessible and beneficial to a wider audience. #openai #chatgpt #gpt-4o